Beginner's Guide to Linear Regression for ML

Linear regression is one of the most basic algorithms in machine learning. "Regression" means the task is to predict a continuous output value.

Examples:

1. What is the market value of the house?

2. What is the price of the product?

Basic terms used:

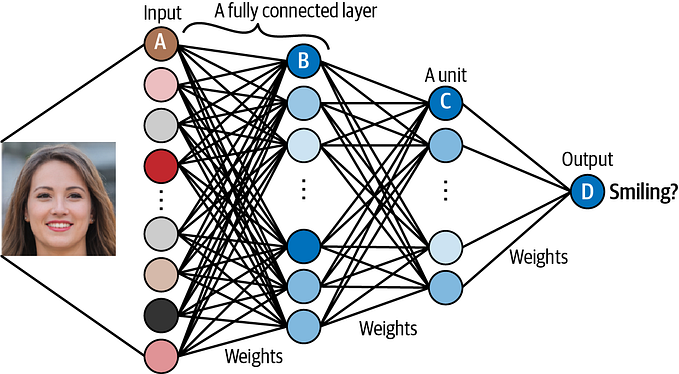

Features: These are the independent variables in a dataset, represented as x1, x2, x3, …, xn for n features.

Target: The dependent variable, whose value depends on the independent variables. It is represented by ‘y’.

Function/Hypothesis: This is the equation used to compute the output: y = m1x1 + m2x2 + m3x3 + … + mnxn + c

Intercept: Here c is the intercept of the line. We usually fold it into the same form as the other terms by treating c as an extra parameter m(n+1) whose feature x(n+1) is always ‘1’, so the equation keeps the form y = m1x1 + m2x2 + … + m(n+1)x(n+1).
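A minimal NumPy sketch of this trick, using made-up numbers: appending a column of ones to the feature matrix lets the intercept c be stored as the last parameter, so a single matrix product gives the same predictions as m1x1 + m2x2 + c.

```python
import numpy as np

# Made-up example: 3 samples, 2 features (x1, x2)
X = np.array([[1.0, 2.0],
              [3.0, 4.0],
              [5.0, 6.0]])
m = np.array([0.5, -1.0])  # parameters m1, m2
c = 2.0                    # intercept

# Usual form: y = m1*x1 + m2*x2 + c
y_usual = X @ m + c

# Folded form: add a constant feature x3 = 1 and treat c as the extra parameter m3
X_aug = np.hstack([X, np.ones((X.shape[0], 1))])
m_aug = np.append(m, c)
y_folded = X_aug @ m_aug

print(y_usual)
print(np.allclose(y_usual, y_folded))  # True
```

This is why many textbooks write the model without a separate c: the intercept is just one more parameter whose feature never changes.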

Training Data (X_train, Y_train): This is the data the machine learns from, i.e., the data it is trained on to find the function.

Getting trained means the machine tries to find an equation that produces the Y_train values from X_train.

Testing Data (X_test, Y_test): Once our model has finished training, we use the testing data to get an idea of how well it performs.

The machine is given X_test and predicts Y_predict, which is then compared with Y_test to measure performance.

Note: y = m1x1 + m2x2 + m3x3 + … + mnxn + c

m1, m2, m3, …, mn are called parameters (or coefficients) and c is the intercept. ‘y’ is the continuous target that depends on the features x1, x2, x3, …, xn.

Finding the feature that has the maximum effect on the output

The more ‘y’ depends on a particular x, the larger the magnitude of the corresponding ‘m’ (provided the features are on comparable scales). We can see which feature is more important by changing one feature at a time, keeping the others fixed, and observing how much it affects ‘y’.
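Because the size of ‘m’ also depends on the units of each feature, coefficients are only comparable once the features share a scale. A minimal sketch on synthetic data (the true slopes 5.0 and 0.5 are made up for illustration) that standardizes the features with sklearn's StandardScaler before comparing the fitted coefficients:

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
n = 200
# Synthetic features: x1 has a strong effect on y, x2 a weak one
x1 = rng.normal(0, 1, n)
x2 = rng.normal(0, 1, n)
y = 5.0 * x1 + 0.5 * x2 + 3.0 + rng.normal(0, 0.1, n)
X = np.column_stack([x1, x2])

# Put every feature on the same scale (mean 0, standard deviation 1)
X_scaled = StandardScaler().fit_transform(X)

model = LinearRegression().fit(X_scaled, y)
for name, m in zip(["x1", "x2"], model.coef_):
    print(name, round(m, 2))

# The feature with the largest |m| has the biggest effect on y
most_important = ["x1", "x2"][int(np.argmax(np.abs(model.coef_)))]
print("Most influential feature:", most_important)
```

Without the scaling step, simply measuring x1 in different units (say, thousands instead of ones) would change its coefficient without changing its real influence on y.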

To find m and c:

We use our training data to find the most suitable values of m and c, so that when an ‘x’ is given, the predicted ‘y’ is as close as possible to the actual value.

The core idea is to obtain a line that fits the data. The best-fit line is the line for which the error between the predicted values and the observed values is minimal. It is called the regression line.

The errors between the observed values and the predicted values (y_actual − y_predict) are called residuals.

Types of Linear Regression

Linear regression can be further divided into two types:

1. Simple Linear Regression:

If a single independent variable is used to predict the value of a numerical dependent variable, then such a Linear Regression algorithm is called Simple Linear Regression.

2. Multiple Linear Regression:

If more than one independent variable is used to predict the value of a numerical dependent variable, then such a Linear Regression algorithm is called Multiple Linear Regression.
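In sklearn, both types can be fitted with the same LinearRegression class; the only difference is the number of columns in X. A minimal sketch on made-up, noise-free data, so the fitted values match the equations used to generate it:

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Simple linear regression: one feature, y = 2x + 1
X_simple = np.array([[0.0], [1.0], [2.0], [3.0]])
y_simple = 2 * X_simple[:, 0] + 1
simple = LinearRegression().fit(X_simple, y_simple)
print(simple.coef_, simple.intercept_)  # one slope m and the intercept c

# Multiple linear regression: two features, y = 2*x1 - 3*x2 + 1
X_multi = np.array([[0.0, 1.0], [1.0, 0.0], [2.0, 3.0], [3.0, 1.0], [4.0, 2.0]])
y_multi = 2 * X_multi[:, 0] - 3 * X_multi[:, 1] + 1
multiple = LinearRegression().fit(X_multi, y_multi)
print(multiple.coef_, multiple.intercept_)  # two slopes m1, m2 and the intercept c
```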

Simple Linear Regression

It models the relationship between the dependent variable and a single independent variable.

Let's assume the dataset has only one feature, ‘x’.

y = mx + c

If we plot the points (x, y) on a graph, linear regression tries to find values of m and c such that the overall error between the actual points (x, y_actual) and the predicted points (x, y_predict) is as small as possible.

It is difficult to tell which line fits best just by looking at the different candidate lines.

So our algorithm finds m and c for the line of best fit by calculating a combined error function over all the data points and minimizing it.

There are three common ways of calculating the error function:

1. Sum of residuals:

This is usually not used, since positive and negative errors can cancel each other out.

2. Sum of the absolute values of residuals:

Taking the absolute value prevents positive and negative errors from cancelling.

3. Sum of squares of residuals:

This is the method mostly used in practice. Squaring penalizes large errors much more than small ones, so there is a significant difference between making big errors and making small errors, which makes it easy to tell candidate lines apart and select the best fit.
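A small sketch with made-up numbers showing the difference: both candidate predictions below have a sum of residuals of zero, so that measure cannot tell them apart, while the absolute and squared versions can. The helper name `error_functions` is just for illustration.

```python
import numpy as np

# Made-up observed values and two candidate sets of predictions
y_actual = np.array([3.0, 5.0, 7.0, 9.0])
pred_a = np.array([4.0, 4.0, 8.0, 8.0])   # residuals of +/-1: small errors
pred_b = np.array([6.0, 2.0, 10.0, 6.0])  # residuals of +/-3: large errors

def error_functions(y_actual, y_predict):
    """Return the three error measures for one set of predictions (illustrative helper)."""
    residuals = y_actual - y_predict
    return {
        "sum": np.sum(residuals),               # positives and negatives cancel out
        "sum_abs": np.sum(np.abs(residuals)),   # no cancellation
        "sum_squares": np.sum(residuals ** 2),  # large errors are penalized more
    }

print(error_functions(y_actual, pred_a))
print(error_functions(y_actual, pred_b))
```

Here the large-error predictions are 3 times worse by the absolute measure (12 vs 4) but 9 times worse by the squared one (36 vs 4).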

Note: Here the predicted values are the values of Y produced by our machine for a given choice of m and c.
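For one feature, minimizing the sum of squared residuals has a well-known closed-form solution: m = Σ(x − x̄)(y − ȳ) / Σ(x − x̄)² and c = ȳ − m·x̄. A minimal NumPy sketch on made-up points, cross-checked against NumPy's own least-squares fit `np.polyfit`:

```python
import numpy as np

# Made-up data points (x, y)
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.2, 4.1, 6.2, 7.9, 10.1])

# Least-squares estimates that minimize the sum of squared residuals
x_mean, y_mean = x.mean(), y.mean()
m = np.sum((x - x_mean) * (y - y_mean)) / np.sum((x - x_mean) ** 2)
c = y_mean - m * x_mean

y_predict = m * x + c
sse = np.sum((y - y_predict) ** 2)  # the minimized error
print(m, c, sse)

# Cross-check with a degree-1 least-squares polynomial fit (returns [slope, intercept])
m_np, c_np = np.polyfit(x, y, 1)
print(np.isclose(m, m_np), np.isclose(c, c_np))  # True True
```

Any other choice of m or c gives a larger sum of squared residuals on these points.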

Linear Regression Using Sklearn

The process takes place in the following steps:

- Loading the Data

- Splitting the Data

- Generating the Model

- Evaluating the Accuracy

- Analyzing Through Graph

Loading the data

Here we will use a dummy dataset: a CSV file with two comma-separated columns, x in the first and y in the second.
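If you don't have the original data.csv, a sketch like the following can generate a stand-in file in the same two-column, comma-separated format. The slope, intercept, noise level and file name are made-up assumptions, not the author's dataset.

```python
import numpy as np

rng = np.random.default_rng(42)
x = rng.uniform(0, 100, size=100)               # hypothetical feature values
y = 1.5 * x + 10 + rng.normal(0, 10, size=100)  # hypothetical linear trend plus noise

# Save as two comma-separated columns: x, y
np.savetxt("data.csv", np.column_stack([x, y]), delimiter=",")

# Reading it back works the same way as in the loading step
data = np.loadtxt("data.csv", delimiter=",")
print(data.shape)  # (100, 2)
```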

```python
import numpy as np  # importing the NumPy library

# Load the two-column CSV file into a NumPy array
data = np.loadtxt(r"C:\Users\Ananthapadmanabha\Desktop\blogs\Untitled Folder\data.csv", delimiter=",")

# Select all rows of column 0 as the feature; reshape(-1, 1) turns it into a
# 2D array of shape (n_samples, 1), which is the shape sklearn expects for X
X = data[:, 0].reshape(-1, 1)

# Select all rows of column 1 as the target
Y = data[:, 1]
```

Splitting the Data

From sklearn we import the model_selection submodule and use its train_test_split() function to divide the data into four parts: X_train, X_test, Y_train and Y_test.
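A self-contained sketch of what train_test_split() returns, on made-up data. The optional random_state argument is an addition not used in the author's code; it fixes the shuffle so the same split is produced on every run.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Made-up data: 10 samples with one feature, y = 2x + 1
X = np.arange(10, dtype=float).reshape(-1, 1)
Y = 2 * X[:, 0] + 1

# 30% of the rows go to the test set; random_state makes the shuffle reproducible
X_train, X_test, Y_train, Y_test = train_test_split(X, Y, test_size=0.3, random_state=0)

print(X_train.shape, X_test.shape)  # (7, 1) (3, 1)
```

The rows are shuffled, but each X row stays paired with its own Y value.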

```python
from sklearn import model_selection

# test_size=0.3 assigns 30% of the dataset to testing (the remaining 70% is used for training).
# The rows are chosen at random, so neither set is a biased slice of the data.
X_train, X_test, Y_train, Y_test = model_selection.train_test_split(X, Y, test_size=0.3)
```

Generating the model

Import linear_model from sklearn to get the LinearRegression algorithm.

Fitting: the fit() method is where the model finds the values of ‘m’ and ‘c’ that best produce the dependent variable ‘y’ from the independent variable ‘x’.

Predicting the y value: predict() computes y_predict from X_test, the data held out for testing.
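To see that predict() simply evaluates y = mx + c with the learned values, here is a minimal sketch on made-up data that roughly follows y = 3x + 4. After fitting, sklearn stores m in coef_ and c in intercept_.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Made-up training data that roughly follows y = 3x + 4
X_train = np.array([[1.0], [2.0], [3.0], [4.0], [5.0]])
Y_train = np.array([7.1, 9.9, 13.2, 15.8, 19.1])
X_test = np.array([[6.0], [7.0]])

algo = LinearRegression()
algo.fit(X_train, Y_train)  # learns coef_ (m) and intercept_ (c)

y_predict = algo.predict(X_test)

# predict() evaluates y = m*x + c with the learned parameters
y_manual = X_test @ algo.coef_ + algo.intercept_
print(np.allclose(y_predict, y_manual))  # True
```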

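```python
from sklearn import linear_model

algo = linear_model.LinearRegression()  # ordinary least-squares linear regression

algo.fit(X_train, Y_train)  # learn m (coef_) and c (intercept_) from the training data

y_predict = algo.predict(X_test)  # predict y for the held-out test inputs
```

Evaluation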

We compare y_predict with Y_test to see how accurately the model predicts the output. The mean squared error, computed with mean_squared_error(), is one of the most commonly used error metrics.
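The mean squared error is simply the average of the squared residuals. A minimal sketch with made-up values, computing it by hand and with sklearn's mean_squared_error():

```python
import numpy as np
from sklearn.metrics import mean_squared_error

# Made-up test targets and predictions
Y_test = np.array([3.0, -0.5, 2.0, 7.0])
y_predict = np.array([2.5, 0.0, 2.0, 8.0])

# Mean squared error: the average of the squared residuals
mse_manual = np.mean((Y_test - y_predict) ** 2)
mse_sklearn = mean_squared_error(Y_test, y_predict)
print(mse_manual, mse_sklearn)  # 0.375 0.375
```

The residuals are 0.5, −0.5, 0 and −1, so the squared errors sum to 1.5 and their mean is 0.375.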

```python
from sklearn.metrics import mean_squared_error

mse = mean_squared_error(Y_test, y_predict)  # average of the squared residuals on the test set

coefficient = algo.coef_  # array of slopes, one per feature (here just m)

intercept = algo.intercept_  # the intercept c

# print the mean squared error, the intercept and the coefficient of the equation found by training
print(mse, intercept, coefficient[0])
```

Analyzing Through Graph

We scatter-plot the test data (X_test against Y_test) and draw the predictions y_predict for the same X_test as a line, so the predicted values can be compared with the actual points.

```python
import matplotlib.pyplot as plt

plt.scatter(X_test, Y_test)  # actual test points
plt.plot(X_test, y_predict)  # predicted values, which lie on the fitted line

plt.xlabel("X test")
plt.ylabel("Predicted y (Line) / Test y (Scatter)")

plt.show()
```

The Jupyter Notebook for the complete code with an explanation is here.

The dataset link is here!

In the next blog, we will build a linear regression algorithm from scratch, without using sklearn, to better understand the math behind it.

Follow me to learn Machine Learning in the next 100 days! Happy Learning.

Enquiries: anantha.kattanij@gmail.com

(would love to hear your feedback)!!